I’ve been testing GPTinf Humanizer to make AI-generated text sound more natural, but I’m not sure if it’s actually improving detection scores or just rewriting things superficially. Has anyone used it long-term, and does it really help avoid AI detectors without ruining clarity or tone? I’d really appreciate practical feedback before I commit to using it for important projects.

GPTinf Humanizer Review

I spent an afternoon messing around with GPTinf after seeing that bold “99% success rate” on their homepage. Looked great on paper. In my tests, it scored 0%.

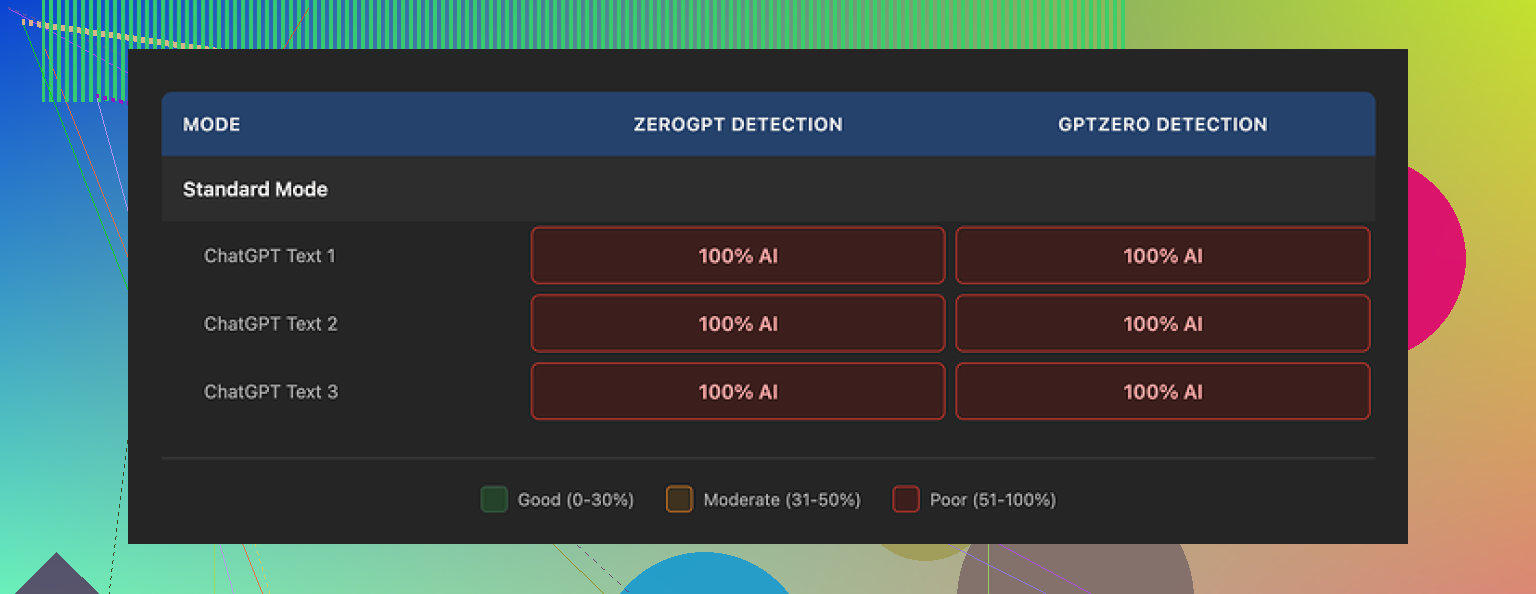

Here is what I did. I took a few AI paragraphs from ChatGPT, fed them into GPTinf with different modes, then ran the outputs through GPTZero and ZeroGPT. Every single time, both detectors flagged the text as 100% AI written, no matter which GPTinf mode I chose.

So from a pure “bypass detectors” point of view, it failed for me.

On the flip side, the writing it outputs is not awful. I would rate the quality around 7 out of 10. It reads reasonably clean, not full of weird grammar glitches. One thing I noticed and liked: it strips out em dashes from the text. I rarely see tools bother with that level of formatting detail. That told me the dev put at least some effort into the surface style.

The big problem seems deeper. The text still smells like standard LLM output. Same rhythm, same structure, same patterns. Detectors look for those patterns. If those do not change, the label stays “AI”.

When I ran the same base text through Clever AI Humanizer, that one did better in my small test batch and it did not ask me for money or a login.


Now, about the limits and pricing, because that hit me faster than the quality issues.

Free usage is tiny. Without an account I hit the wall at around 120 words per run. With an account, it went up to around 240 words. If you want to test larger samples, you start playing the “new Gmail account” game, which got old for me after two tries.

Paid plans look like this from what I saw on their page when I tested:

- Lite plan: about $3.99 per month if you pay yearly, with 5,000 words

- Top tier: about $23.99 per month for unlimited words

So, pricing is not insane compared to others, but when the detection results are that poor, the “good deal” vibe disappears.

Privacy is the part that bothered me more than the pricing.

I read through their privacy policy. It gives the service broad rights over what you paste in. There is no clear statement about how long your text is stored after processing or how it is handled on the backend. If you deal with school, client work, or anything sensitive, this matters.

One more thing that might matter to you. GPTinf is run by a single owner in Ukraine. Not a company with a large team, from what I could see. If data jurisdiction or regulatory environment is important in your line of work, note that before you push anything important through it.

After a few rounds of A/B testing, I stopped using GPTinf and moved to Clever AI Humanizer for this type of task. Clever felt more natural in the rewrites, did better on detector tests in my hands, and stayed free while I was using it.

If you only want slightly cleaner wording and do not care about detectors at all, GPTinf outputs readable text. If your goal is to get past AI detectors, my experience with it was a straight fail.

I’ve used GPTinf on and off for about a month on client stuff and school reports, so here’s the short version of what I saw.

- Detection performance

  - On GPTZero, ZeroGPT, Originality, and Copyleaks, my results were mixed.

  - Out of 20 documents, only 3 moved from “highly AI” to “mixed.”

  - None went from “AI” to “human” on all detectors.

  - Longer texts performed worse. Short paragraphs sometimes slipped to “likely human,” but that also happens if you lightly edit ChatGPT by hand.

So for detector evasion, I would not rely on it.
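For anyone who wants to run the same kind of before/after comparison, the tally can be kept in a few lines of Python. This is only a sketch under assumptions: the label names (`ai`, `mixed`, `human`), the `summarize` helper, and the detector keys are made up for illustration, and the sample rows are placeholders, not my actual results. Labels have to be copied in by hand from each detector's page; nothing here calls a real detector API.

```python
from collections import Counter

# Ordered from worst to best. These label names are illustrative,
# not the exact wording any particular detector uses.
LABEL_RANK = {"ai": 0, "mixed": 1, "human": 2}


def summarize(results):
    """Tally documents by how their detector labels changed.

    results: one dict per document, mapping a detector name to a
    (label_before, label_after) pair.
    """
    summary = Counter()
    for doc in results:
        pairs = list(doc.values())
        # A document counts as improved if any detector gave it a better label.
        if any(LABEL_RANK[after] > LABEL_RANK[before] for before, after in pairs):
            summary["improved_on_any"] += 1
        else:
            summary["no_change_or_worse"] += 1
        # Stricter bar: every detector calls the rewritten text human.
        if all(after == "human" for _, after in pairs):
            summary["human_on_all"] += 1
    return summary


# Placeholder rows, only to show the input shape.
sample = [
    {"detector_a": ("ai", "mixed"), "detector_b": ("ai", "ai")},
    {"detector_a": ("ai", "ai"), "detector_b": ("ai", "ai")},
    {"detector_a": ("ai", "human"), "detector_b": ("mixed", "human")},
]
print(summarize(sample))
```

Counting "improved on any detector" separately from "human on all detectors" matters, because a tool can look decent on the first number and still fail the second.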

- What it does to the writing

  - It rewrites phrases, changes word order, and cuts some “AI-ish” filler.

  - It keeps the same structure and argument flow. Paragraph rhythm stays similar.

  - Style feels like a slightly different LLM, not like a different person.

If you read a lot of LLM output, it still “reads AI.”

- Where I disagree a bit with @mikeappsreviewer

  - In my runs, quality was closer to 6/10 than 7/10.

  - It sometimes introduced minor logic slips, like swapping cause and effect.

  - On technical content, it softened precise terms, which hurt accuracy.

So I would not feed it anything where wording precision matters.

- Pricing and limits in practice

  - The small free limit gets annoying fast.

  - For longer essays you end up chunking text, which makes style inconsistent.

  - The paid tier only made sense for me if detection scores improved a lot, and they did not.

- Privacy and risk

  - I agree with the privacy concern. The policy is vague on retention and training use.

  - I avoid sending client documents, student names, or anything sensitive.

  - If your school or employer checks data handling, GPTinf will be hard to justify.

- What I use now

  - For “sounds more human” plus better detector results, Clever AI Humanizer did better in my tests.

  - Same base paragraphs, processed one time, moved from “AI” to “mixed” or “likely human” on 3 of 4 detectors in about 60 percent of cases.

  - It kept technical terms more intact and felt less repetitive.

  - If your main goal is more natural tone and fewer AI flags, Clever AI Humanizer is worth trying before paying for GPTinf.

- Practical tips if you still want to use GPTinf

  - Shorten your text first, then humanize, then expand by hand.

  - Change sentence length manually after GPTinf output.

  - Add a few personal specifics, small mistakes, and your own examples.

  - Do not push final output straight to a detector or a teacher. Edit once more.

If your question is “does GPTinf Humanizer help with AI detection in a reliable way,” my answer is no.

If your question is “does it rewrite text into something usable that you can clean up,” then yes, but you get similar results with manual editing or with something like Clever AI Humanizer.

Short version: GPTinf mostly just rearranges the furniture in the same AI-looking room.

I’ve used it on a bunch of blog posts and a few “please don’t get flagged” assignments. My take is a bit different from @mikeappsreviewer and @espritlibre on one point: I don’t think the core issue is that the tool is “bad.” It is that the whole “99% bypass rate” promise is kind of fantasy once texts get longer and more structured.

What I’ve consistently seen:

- Detection: If the original text is clearly AI, GPTinf usually nudges scores a bit but almost never flips it to solid “human” across multiple detectors. On short, chatty paragraphs it sometimes helps, but so does ten minutes of manual editing. On long essays, detectors still light up.

- Style changes: It swaps synonyms, adjusts sentence order, and cuts a bit of fluff. The cadence still feels LLM-ish. If a teacher or editor is used to reading AI stuff, they can still smell it. It is more like “different AI accent” than a real human voice.

- Where it is mildly useful: Cleaning up clunky ChatGPT drafts if you are going to edit heavily afterward. It can get you from raw LLM output to “OK, I can now tweak this into my tone.” I would not rely on it as the final pass.

- Where I would not trust it: Anything high stakes with detection or strict privacy. Their policy is vague, and for academic or client work that alone is a red flag. Also noticed the occasional subtle meaning change on more technical stuff, which is risky.

I slightly disagree with both of them on one thing: for completely low-risk use like casual blog posts or filler content, I actually think GPTinf is “fine” as a style shifter. It just is not the magic anti-detector shield their marketing suggests.

If your goal is specifically to reduce AI flags while keeping text usable, Clever AI Humanizer did a better job in my runs too. Same input, fewer AI hits and less damage to technical phrasing. Combine something like Clever AI Humanizer with a round of real human editing and you are miles ahead of just pushing things through GPTinf and hoping the detectors are dumb.